秉持尊重学生和家长隐私的原则,以下所展示的图片,都已经经过确认可以放到公开媒介。

还有N多学术素材,由于知识产权或家长原因,不便公开,敬请理解。

本文作者MA同学,来自一所加州,11年级学生,祖籍大陆,第二代移民。梦想着进入美国名校读计算机专业,为此,参加美国名校科研,增加学术背景,开拓视野,获得真知。通过美国名校科研老师的指导,在2017年的暑假申请到了加州大学伯克利分校计算机专业的科研机会。下文是学生在科研学习的过程中所写。供学生家长参考。

Research Program Summary

At August I had the honor to participate in a research based program. In this program, I had an opportunity to learn about data analysis and machine learning. In the following summary will be the detailed report of this program. What I can said is that, I learn many things from this program and this will be an extremely valuable experience in my life.

在8月我有幸参加了一个关于计算机的研究项目。在这个过程中,我有了一个学习数据的机会,分析与机器学习。在下面的摘要中将是这个程序的详细报告。我能说的是,我学到了很多。从这个项目的东西,这将是一个非常宝贵的经验,在我的生活中。

STEP ONE

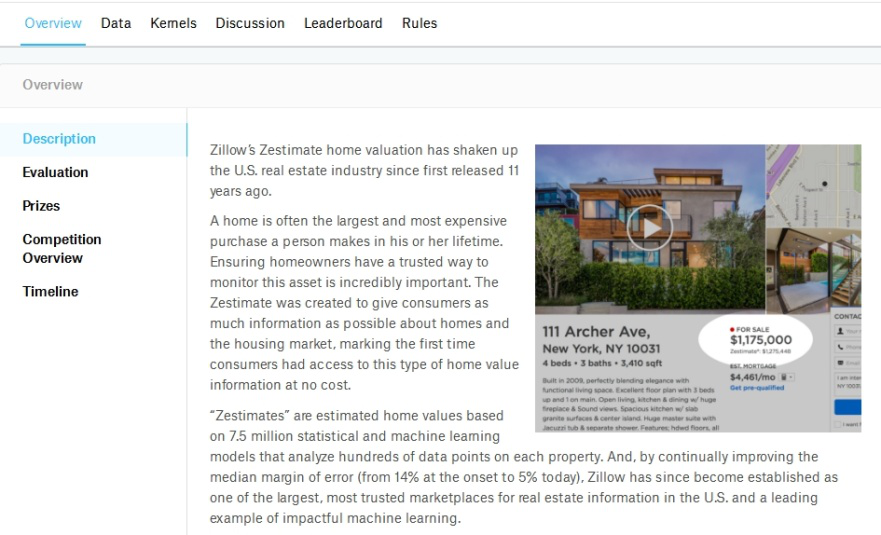

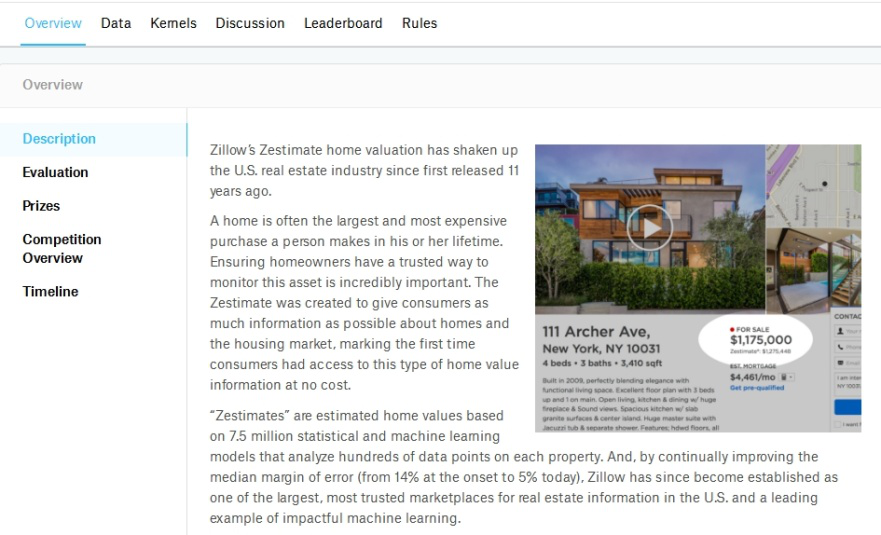

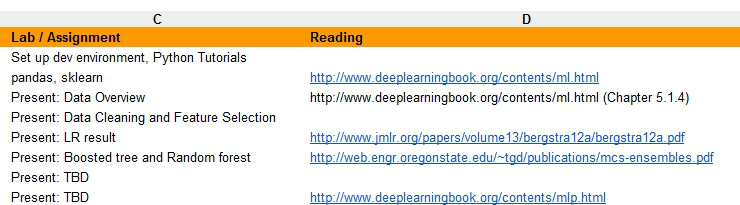

At the start of the first week, we have a brief introduction to our fellow program participant: a senior (high school) from Los Angeles, a sophomore (high school) from Minnesota, and the mentor of our program-Dr.C, PHD of UC Berkeley. In the first meeting, Dr.Chang elaborate the basic definition of machine learning and the project we are going to do. The project we about to challenge is a kaggle competition, which we need to create a model that can predict the real estate price’s error. At the first glance, the project seems like mission impossible, but Dr.C has already have a plan to help us to accomplish this goal.

在第一周的开始,我们简要介绍了我们的同伴项目参与者:洛杉矶的一位高中生和明尼苏达的一位高中生,和我们的科研导师C,来自加州伯克利大学。在第一次会议上,C导师阐述机器学习的基本定义和我们将要做的项目。这个项目我们要挑战的是Kaggle竞争,我们需要建立一个模型,可以预测房地产价格的错误。乍一看,这个项目好像是不可能的任务,但C博士已经有计划帮助我们实现这个目标。

Here is the link on our project and schedule:

这里是我们的项目和时间表的链接:

Project link:

编者略

Lecture for the introduction of ML:

编者略

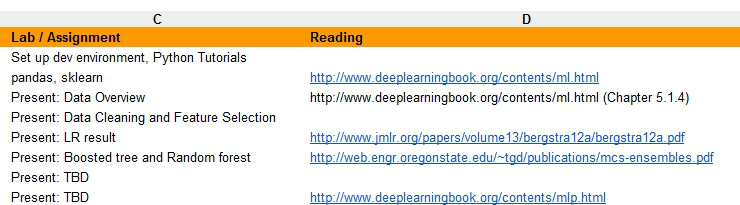

The assignment we need to do is to read machine learning basics (chapter 5), and python environment setup. (the computer language we use for our project is python)

我们需要做的任务是阅读机器学习基础知识(第5章)和Python环境设置。(计算机语言,我们的项目使用的是Python)

After the setup, Dr.C walks us through the procedures of making the prediction model. First we need to import the necessary modules. The modules include: numpy, scikit learn, matplotlib, panda and csv files, which allow us to use functions that are pre-build in these modules.

在设置之后,C导师带领我们完成了预测模型的制作过程。首先,我们需要导入必要的模块。该模块包括:NumPy,scikit学习,matplotlib,panda和CSV文件,它允许我们使用预先建立的这些功能。

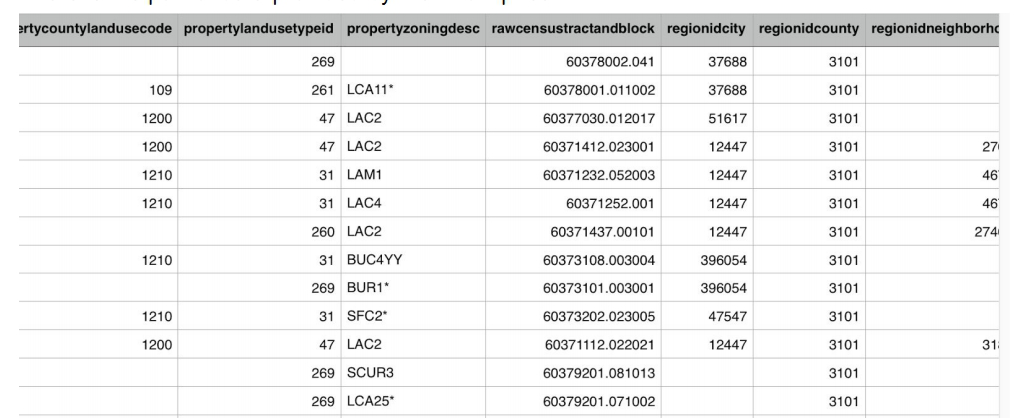

The first thing we need to do is to import the data. While the csv is imported, we can proceed to clean the data. The significance of the data cleaning is to make sure the accurateness of our data. As we load the data into our model, we want the model to give valid prediction, which required a good training set for the computer to learn the function.

我们需要做的第一件事是导入数据。在导入CSV时,我们可以继续清理数据。数据的意义,清洗是保证我们数据的准确性。当我们将数据加载到模型中时,我们希望模型提供有效的预测,这需要一个很好的计算机学习功能的训练机会。

The process of data cleaning was not easy for us. The reasons for this is we do not have enough background knowledge nor experience with python and machine learning. With help of Dr.C, we managed to overcome the obstacles and finish the data cleaning.

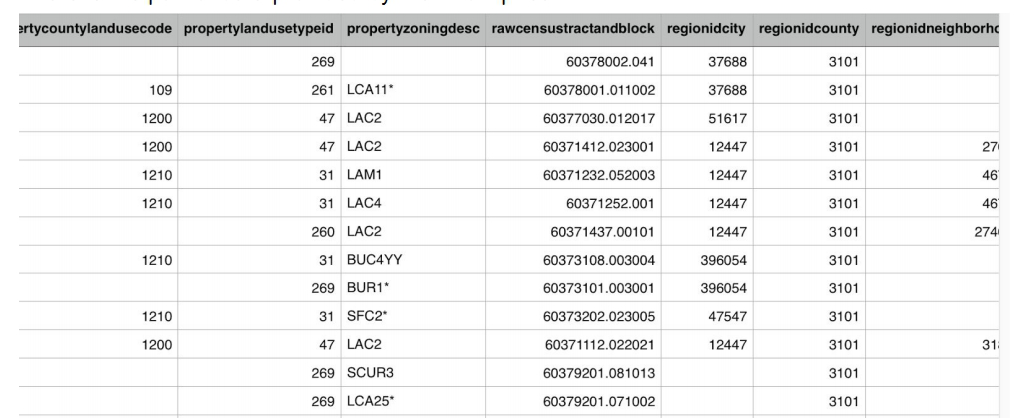

Here is the part of data provided by the zillow price:

数据清理的过程对我们来说并不容易。究其原因,是我们没有足够的背景知识和经验。使用python和机器学习。在C博士的帮助下,我们克服了障碍,完成了数据清理工作。这里是由Zillow的价格提供数据的一部分:

Modules that we used:

我们使用的模块:

http://www.numpy.org/

http://pandas.pydata.org/

STEP Two

After the data cleaning process, we need to import the necessary model or algorithm to help the computer create a predictive function, the first function we apply is a linear regression module from scikit learn. What the linear regression do is from all the training set it will draw a linear function to fits the x inputs and y outputs.

Here is the what the code looks like:

在数据清理过程之后,我们需要导入必要的模型或算法,以帮助计算机创建预测函数。第一个函数将从scikit线性回归模块学习。线性回归是从所有的训练集中得出的。适合x输入和y输出的线性函数。下面是代码的样子:

from sklearn.linear_model import LinearRegression

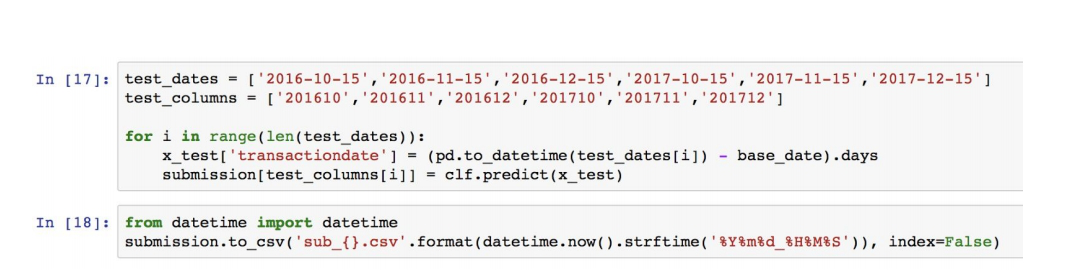

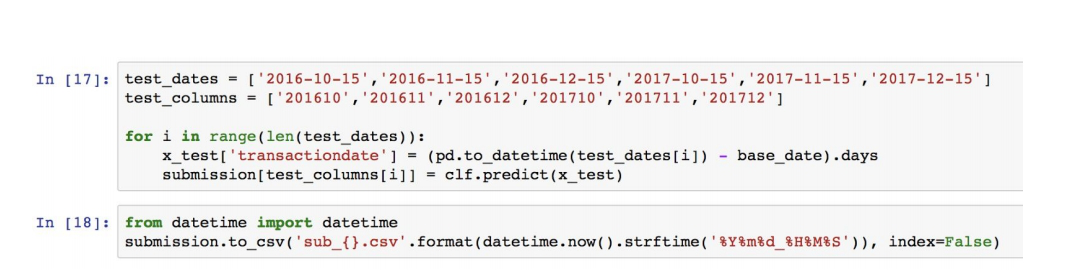

After the computer gave the results, we put the results into the csv format and submit to the competition:

Well, the ranking for my submission is not that great using the linear regression, it place me around 1250th, so that is when the boosted trees comes in.

Noted that many of this algorithms and functions are based on mathematical principles, as Dr.C taught us the math behind machine learning.

嗯,我的排名不是很好,用线性回归,它把我放在第一千二百五十左右,所以就是在提升的时候,困难来了。注意到许多算法和函数基于数学原理,正如C博士教我们的机器背后的数学学习。

Boosted trees is a machine technique for regression and classification problems, which produces a prediction model in the form of an ensemble of weak prediction models, typically decision. It builds the model in a stage-wise fashion like other boosting methods do, and it generalizes them by allowing optimization of an arbitrary differentiable cost function.

增强树是一种用于回归和分类问题的机器技术,它以一种形式产生一个预测模型。弱预测模型的集合,典型决策。它像其他增强方法一样,以一种阶段性的方式构建模型,并且通过允许任意可微代价函数的优化推广它们。

The boosted tree algorithm that we first adapted is xgboost, short for “Extreme Gradient Boosting”. We believe that if we use xgboost instead of linear regression, we can get a better model.

提高树算法首先适应的是xgboost,“Extreme Gradient Boosting”。我们相信,如果我们使用xgboost而不是线性回归,我们可以得到一个更好的模型。

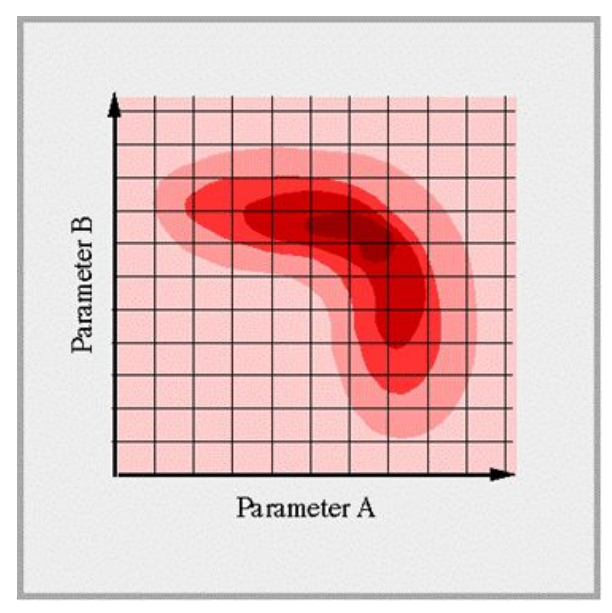

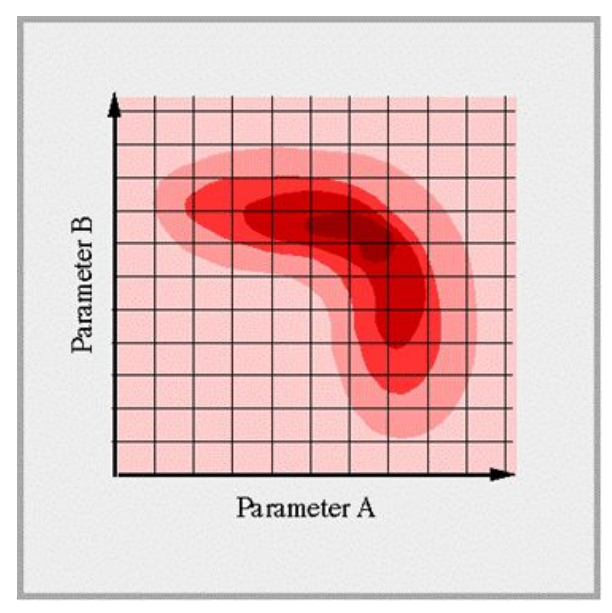

To use xgboost, there is couple hyperparameters that we need to tune: learning rate (steps that the computer take when performing gradient descent), max depth (control/prevent the overfitting), and subsample (also control overfitting or underfitting, fraction of observation that are randomly samples.) In order to tune for the best hyperparameter, another concept need to be apply: grid search and random forest search.

使用xgboost,有几个参数,我们需要调整:学习速率(步骤:电脑在执行梯度下降),最大深度(控制/防止过拟合),和子样本(同时控制过度拟合或underfitting,分数观察是随机样本。)为了调整最佳的超参数,另一个概念需要申请:网格搜索和random forest search。

Grid search and random forest search can be put this simply, the specific type of algorithm that help the us identify the best hyperparameters to use.

Here is what a grid search looks like:?

网格搜索和random forest search可以简单地说,特定类型的算法,帮助我们确定最好的。参数使用。下面是网格搜索的样子:

By using the grid search, we able to find the best parameter, as I apply the xgboost as my module, my ranking went from 1200 ish to 700 ish. We can see the significance change due the change of model.

参数采用网格搜索,我们能找到最好的参数,我将xgboost作为我的模块,我的排名就从1200左右到700。从模型的变化可以看出意义的变化。

Currently the model I’m using is 丨ightBGM, recommend by Dr.C , the model is more efficient and accurate than xgboost. The process is not easy to us beginners, but I believe that there is a lot of things we can learn from this process, and can be handy to us in the futures.

目前我使用的模型是丨ightbgm,推荐由C老师,该模型比xgboost更加高效和准确。这个过程对我们初学者来说并不容易,但我相信我们可以从这个过程中学到很多东西,并且可以在我们手边得心应手。

Links:

编者略

Afterthoughts and Conclusion.

Before joining this program, I basically have zero ideas about research based computer science. Whether is the atmosphere or learning materials, I have never have the opportunity to experience before this program. I truly enjoy these several weeks of brainstorming and coding. I believe that I have become better in problem solving and analysis. Here I have to give special thanks to Dr.C, who guide us through this entire program, and help us steps by steps whenever we’re in trouble. After this program, I become more determined to major in computer science and even computer science engineer. I wish I can continued to develop my skills in programming and become more intellectual as I learn more about machine learning, participating this program definitely expand my perspective on computing and algorithm that modern technology capable of, it also deepen my understanding to STEM research and carrying out advance project.

At the end of the note, I want to thanks to my fellow participants who have help me whenever I ran into confusion and problems, and Dr.C for teach us the basics of machine learning. Pictures that commemorates the program.

在加入这个计划之前,我对研究计算机科学基本上没有什么想法。无论是氛围还是学习材料,我从来没有机会体验这个科研之前。我真的很享受这几周的头脑风暴。编码。我相信我在解决问题和分析方面已经变得更好了。在这里,我要特别感谢指导我们的C导师。通过这整个计划,并帮助我们一步一步地在我们遇到麻烦的时候。这个科研结束后,我更加决心主修计算机科学,甚至计算机科学工程师。我希望我能继续发展我的编程技能,并成为更多的知识,我学到更多的机器学习,参与这个计划肯定扩大我的角度计算和现代技术所能达到的算法,也加深了我对STEM研究和实施先进项目的理解。结束时,我要感谢我的同伴们,每当我遇到困惑和问题时,他们都会帮助我。C导师教我们机器学习的基本知识。